Use Data To Build Better Batches

EP Editorial Staff | March 1, 2023

Advanced analytics applications empower SMEs to quickly and intuitively isolate batch data and determine the causes of run-to-run variability.

By Synjen Marrocco, Seeq

Throughout the process industries, operational decisions are too often based on subjective judgments, leading to inefficiencies at best, or equipment and personnel safety issues at worst. Given these risks and the automated tools now available to inform operations, decision making on the fly is becoming less acceptable.

In many plant settings, users have become familiar with an advanced analytics application of one kind or another. Most likely, they have used the technology to generate insights from continuous process data, helping them optimize operational efficiency. These applications are equally beneficial for batch processes, but the help they provide there is less well understood.

Batch vs. continuous processing

The discrete, repeated steps of a batch process fundamentally set it apart from its continuous counterpart, requiring different tactics and metrics to troubleshoot issues and measure performance. With batch processing, repetition is everywhere—valves open and close; agitators start and stop; and vessels heat up, cool down, fill up, and empty. As the process repeats, data patterns emerge. Acting on those patterns can eliminate variability and streamline the process. The result is improved operational excellence and overall equipment effectiveness.

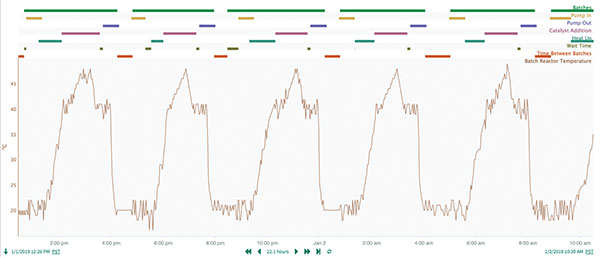

Advanced analytics applications enable subject-matter experts (SMEs) to identify and isolate periods of interest, based on batch data patterns. Some of these patterns are expected and planned within a batch, such as a reactor heat up or cool down. However, while they may follow the same pattern, these process cycles are not identical. Some are faster or slower, or more or less extreme.

By analyzing these variations, SMEs can detect and diagnose process irregularities. Periods of interest, or events, can be configured to describe any set of process conditions with specific entry and exit criteria. Once configured, they are automatically generated across all historical and real-time data.
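As a rough illustration of entry and exit criteria, the sketch below scans a time-stamped signal and opens a period when one condition is met and closes it when another is met. The function name `find_periods`, the 60 and 40 degree thresholds, and the temperature samples are hypothetical and are not taken from Seeq or any other analytics product.

```python
from datetime import datetime, timedelta


def find_periods(samples, enter, leave):
    """Return (start, end) pairs for periods that open when enter(value)
    is true and close when leave(value) is true."""
    periods = []
    start = None
    for ts, value in samples:
        if start is None and enter(value):
            start = ts                      # entry criterion met
        elif start is not None and leave(value):
            periods.append((start, ts))     # exit criterion met
            start = None
    return periods


# Hypothetical reactor temperature, one sample per minute (illustrative only).
t0 = datetime(2023, 3, 1, 8, 0)
temps = [25, 30, 62, 80, 85, 70, 38, 30, 28, 65, 90, 75, 35, 26]
samples = [(t0 + timedelta(minutes=i), v) for i, v in enumerate(temps)]

# Assumed criteria: a heat-up period starts above 60 and ends below 40.
periods = find_periods(samples, enter=lambda v: v > 60, leave=lambda v: v < 40)
for start, end in periods:
    print(start.time(), end.time(), end - start)
```

Using separate entry and exit thresholds, rather than a single limit, keeps a noisy signal from opening and closing a period repeatedly around one value.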

For example, SMEs can view periods of interest end-to-end or overlaid, enabling rapid identification of variance among batches. Part of an application’s value stems from the ease and speed with which it generates these types of insights. This is a key differentiator between advanced analytics and spreadsheets.
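One way to picture an overlaid view is to re-index each batch to elapsed time since its own start and then compare values at the same offset. The sketch below does that for two batches. The helper `to_elapsed`, the minute-of-day timestamps, and the values are assumptions made for illustration, not a description of how any particular product implements the view.

```python
def to_elapsed(profile):
    """Re-index a batch profile [(minute_of_day, value), ...] to minutes
    since the start of that batch."""
    start = profile[0][0]
    return {t - start: v for t, v in profile}


# Hypothetical heat-up profiles from two batches run at different times of day.
batch_a = [(480, 25), (481, 40), (482, 60), (483, 78)]
batch_b = [(600, 25), (601, 35), (602, 50), (603, 70)]

overlaid = [to_elapsed(b) for b in (batch_a, batch_b)]

# Compare the batches at every elapsed minute they have in common.
common = sorted(set(overlaid[0]) & set(overlaid[1]))
spread = {t: max(o[t] for o in overlaid) - min(o[t] for o in overlaid)
          for t in common}
worst = max(spread, key=spread.get)

print(spread)
print("largest difference at elapsed minute", worst)
```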

In the Seeq analysis system, batch and phase capsules can be used to automatically identify batch operations using data patterns. Courtesy Seeq

Integrating data sources

In addition to providing intuitive analysis, advanced analytics applications seamlessly integrate disparate data sources, whether they are housed on-premises or in the cloud. Connecting multiple data types to a single system saves time and provides opportunities for analysis that were not previously possible.

This type of integration, for instance, empowers a user to simultaneously visualize how lab data results vary with process parameters. An SME may suspect high temperature is detrimental to final product quality, as identified by lab analysis, in a batch process. The batch history data can be used to quickly isolate each batch, then an SME can create a signal displaying the maximum temperature reached—or the amount of time spent above a specified temperature. The findings from each batch can then be overlaid to identify correlations between extreme temperature and unsatisfactory product quality to confirm or negate the suspicion.
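The temperature example above could be sketched roughly as follows: compute the peak temperature and the time spent above a limit for each batch, then check how the peak tracks the lab result. The batch IDs, temperatures, purity values, and the 90 degree limit are all invented for illustration, and the Pearson coefficient is only one possible way to express the correlation.

```python
from math import sqrt


def batch_features(temps, limit, sample_minutes=1.0):
    """Peak temperature and minutes spent above `limit` for one batch."""
    minutes_above = sum(sample_minutes for t in temps if t > limit)
    return max(temps), minutes_above


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical data: per-batch temperature samples and lab purity (%).
batches = {
    "B1": ([70, 82, 88, 84, 75], 98.1),
    "B2": ([70, 85, 93, 91, 78], 96.4),
    "B3": ([70, 80, 86, 83, 74], 98.6),
    "B4": ([70, 88, 97, 95, 80], 94.9),
}
LIMIT = 90.0  # assumed temperature of concern

peaks, purities = [], []
for batch_id, (temps, purity) in batches.items():
    peak, minutes_above = batch_features(temps, LIMIT)
    peaks.append(peak)
    purities.append(purity)
    print(batch_id, peak, minutes_above, purity)

r = pearson(peaks, purities)
print("correlation between peak temperature and purity:", round(r, 3))
```

A strongly negative coefficient would support the suspicion and a value near zero would argue against it. With only a handful of batches, either result is a prompt for further investigation rather than proof.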

Additionally, there is often lag associated with lab data, with sampling and subsequent analysis taking hours. This lag plagues SMEs as it becomes difficult to immediately tell which period of time-series data should be associated with a specific outcome observed in lab data. Advanced analytics applications ease this burden by shifting lab data signals by the typical process lag time using a formula tool. Once synchronized, SMEs can more easily discern patterns in process conditions and their impact on product quality.
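A minimal sketch of the lag correction, assuming lab results are time-stamped when they are reported and that the typical delay is two hours: each lab timestamp is moved back by the lag and then paired with the most recent process sample. The function names, the lag, and the data are hypothetical. A real formula tool would expose its own syntax for the shift.

```python
from bisect import bisect_right
from datetime import datetime, timedelta

LAB_LAG = timedelta(hours=2)  # assumed typical sampling-plus-analysis delay


def shift_lab_results(lab_results, lag):
    """Move each lab timestamp back by `lag` to line it up with the process."""
    return [(ts - lag, value) for ts, value in lab_results]


def process_value_at(process, when):
    """Most recent process sample at or before `when`, or None if none exists."""
    times = [ts for ts, _ in process]
    i = bisect_right(times, when) - 1
    return process[i][1] if i >= 0 else None


# Hypothetical process temperature every 30 minutes starting at 08:00.
day = datetime(2023, 3, 1)
temps = [70, 80, 92, 85, 75, 72]
process = [(day + timedelta(hours=8, minutes=30 * i), t)
           for i, t in enumerate(temps)]

# Hypothetical lab purity results, stamped at the time they were reported.
lab_results = [
    (day + timedelta(hours=11), 95.2),
    (day + timedelta(hours=12, minutes=30), 98.0),
]

aligned = [(ts, purity, process_value_at(process, ts))
           for ts, purity in shift_lab_results(lab_results, LAB_LAG)]
for ts, purity, temp in aligned:
    print(ts.time(), purity, temp)
```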

With Seeq’s Capsule Time View, profiles from multiple batches can be overlaid for easy identification of differences in catalyst charge rate. Courtesy Seeq

Breaking up the batches

The golden batch represents the best-case scenario for SMEs and decision makers: the profile a batch follows when every step runs optimally. Functionality within advanced analytics applications makes defining a golden batch quick and intuitive.

Batches often have a key parameter signal. That signal can be used to define each batch and sub-phase. By using this key parameter—or multiple parameters in some cases—each batch can be quickly contextualized with a defined start and end condition.

This level of detail allows SMEs to dive deeper into the causes of batch variability and quickly address each root cause. For example, common sources of variability among batches may include temperature or flow limitations based on the process load during a batch, and analysis quickly exposes these anomalies.

Because an advanced analytics application provides quantifiable outputs, this information can support a quick, well-grounded project justification for process improvements or for procedural changes aimed at reducing excessive batch duration. Once defined, these calculations can be automatically applied to past and future batches. This enables SMEs to compile months or even years of historical data from previous batches and production campaigns for further analysis and comparison.

Batches can also be grouped by duration using a histogram tool, showing how each batch compares with the historical average duration and how widely durations vary around it, as measured by the standard deviation.
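The duration comparison can be sketched with a few lines of standard-library Python. The batch durations, the one-hour bin width, and the 1.5 standard deviation cutoff below are illustrative assumptions, not recommended settings.

```python
from collections import Counter
from math import floor
from statistics import mean, stdev

# Hypothetical batch durations in hours.
durations_h = {"B1": 6.0, "B2": 6.5, "B3": 5.5, "B4": 9.0, "B5": 6.0, "B6": 6.0}

values = list(durations_h.values())
avg = mean(values)
spread = stdev(values)  # sample standard deviation

# Flag batches more than 1.5 standard deviations from the average (assumed cutoff).
flagged = [batch for batch, d in durations_h.items()
           if abs(d - avg) > 1.5 * spread]

# Simple text histogram with one-hour bins.
histogram = Counter(floor(d) for d in values)
for hour in sorted(histogram):
    print(f"{hour}-{hour + 1} h: {'#' * histogram[hour]}")

print("average:", avg, "std dev:", round(spread, 2), "flagged:", flagged)
```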

Additional sources of insight

Temperature and flow profiles also yield critical insights. The discrete nature of batch processing means each subsequent batch is analogous to a unique trial of an experiment. Analyzing how temperature and flow profiles vary, for example before and after an operator-initiated change, provides important information related to the success of an adjustment.

SMEs can use this information to ask the right questions and determine the cause of variability within the process. Does the timing or speed of catalyst introduction cause some batches to heat up faster than others? Does an impurity cause some batches to dry slower than others?

These types of insights are created by identifying a cause-and-effect relationship between two types of information. Even when a relationship is already suspected, advanced analytics can help SMEs verify their hunches and quickly quantify the process impact in units of time, flow, or mass.

Contextualizing data using advanced analytics applications improves SME efficiency, enhances productivity, and speeds time to insight. Advanced analytics applications are powerful diagnostic and quantification tools that empower SMEs to determine the cause and magnitude of batch variability and confidently move forward with improvement projects.

Synjen Marrocco is an Analytics Engineer at Seeq Corp., Seattle (seeq.com), where he helps process-manufacturing groups maximize value from their data.
